In the vast realm of blogging, search engine optimization (SEO) plays a crucial role in driving organic traffic to your website. One often overlooked aspect of SEO is the Robots.txt file, which acts as a guide for search engine crawlers. By optimizing your Robots.txt file, you can enhance your Blogger blog's SEO performance by as much as 96%.

In this article, we will provide you with a step-by-step guide on how to create the perfect Robots.txt file for your Blogger, empowering you to dominate search engine rankings and attract a wider audience.

Are you thinking, "I don't have any technical knowledge, so how can I set up a Robots.txt file for my blog?" Let me assure you that you can. This guide is designed to be a simple yet comprehensive overview of Robots.txt, covering its basic features and giving you the understanding you need to set up your own Robots.txt file in the future.

Feeling curious? Let's get started!

Understanding the Robots.txt File

What is a Robots.txt file?

The Robots.txt file is a simple text file that lives on your website's server. Its main job is to talk to search engine crawlers and tell them which pages and files they can visit and which ones to stay away from. Think of it as a roadmap for search engines, helping them navigate and make sense of your website's layout and content.

By using the Robots.txt file effectively, you can ensure that search engines understand your site better, leading to improved visibility and better SEO performance.

Why is it important for SEO?

The Robots.txt file is crucial for optimizing your website for search engines. By using it strategically, you can control how search engine crawlers navigate your content, which directly affects your search engine rankings. Here's why the Robots.txt file is important for SEO:

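- Control Crawling: With the Robots.txt file, you decide which parts of your site search engines should crawl and which ones to exclude. This helps prioritize valuable content and keeps crawlers away from duplicate or irrelevant pages. Note that blocking crawling is not the same as blocking indexing: a blocked URL can still appear in search results if other pages link to it.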

- Maximize Crawl Budget: Search engines allocate limited resources to crawl your site. By optimizing your Robots.txt file, you ensure that crawlers focus on the most important pages, making the most of their crawl budget.

- Keep Crawlers Out of Non-Public Areas: You can ask crawlers to skip directories that are not meant to show up in search results. Keep in mind that the Robots.txt file is itself publicly readable and only well-behaved crawlers obey it, so it is not a substitute for password protection of genuinely confidential files.

How search engine crawlers utilize the Robots.txt file

Search engine crawlers, also known as robots or spiders, rely on the information provided in the Robots.txt file to navigate and index websites.

When search engine crawlers visit your website, they first look for the Robots.txt file in the root directory. Once found, they interpret the directives specified within the file to determine which pages or directories to crawl and which ones to exclude.
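To make the "root directory" idea concrete, here is a minimal Python sketch that works out where a crawler would look for the Robots.txt file, starting from any page URL. The function name `robots_txt_url` and the example post URL are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; robots.txt always sits at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# A hypothetical post URL on the placeholder blog used in this article.
print(robots_txt_url("https://yourblogname.blogspot.com/2024/01/my-post.html?m=1"))
```

Whatever page the crawler starts from, the path and query string are dropped and only the host matters, which is why each blog (or custom domain) has exactly one Robots.txt file.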

I hope you now have a better understanding of the Robots.txt file. Next, we will guide you through creating a Robots.txt file for your Blogspot blog. This file will not only help your articles get indexed quickly but also contribute to the SEO boost of up to 96% mentioned earlier.

Creating the Perfect Robots.txt File for your Blogger SEO

Now, onto the most exciting part! You will gain access to an incredibly useful Robots.txt file for your Blogspot blog. This file will undoubtedly save you time and ensure your articles are indexed correctly.

Custom Robots.txt File for your Blogger

Let me tell you that there is no "perfect" Robots.txt file that fits every website. However, the Robots.txt file I'll be sharing is designed to help beginners reduce unnecessary issues and facilitate quick indexing of their articles once published. So, let's take a look at it:

Let's start by adding the simplest Robots.txt file possible.

    User-agent: *
    Disallow:
    Allow: /

    Sitemap: https://yourblogname.blogspot.com/sitemap.xml

This is the simplest and default Robots.txt file that you can use for your Blogger platform. It does not generate any errors on your blog, and it allows all search engines to crawl your blog.
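If you would like to convince yourself that this simplest file really allows everything, here is a small sketch using Python's standard `urllib.robotparser` module. The post and label paths are invented examples, and Python's parser is only an approximation of how real search engines read the file.

```python
from urllib.robotparser import RobotFileParser

# The simplest robots.txt from this article, one directive per line.
SIMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow:
Allow: /

Sitemap: https://yourblogname.blogspot.com/sitemap.xml
"""

simple_parser = RobotFileParser()
simple_parser.parse(SIMPLE_ROBOTS_TXT.splitlines())

base = "https://yourblogname.blogspot.com"
# Hypothetical URLs: a post page and a label page.
post_allowed = simple_parser.can_fetch("Googlebot", base + "/2024/01/my-post.html")
label_allowed = simple_parser.can_fetch("Googlebot", base + "/search/label/SEO")

print("post allowed:", post_allowed)
print("label page allowed:", label_allowed)
print("sitemaps:", simple_parser.site_maps())  # site_maps() needs Python 3.8+
```

Both checks should report that crawling is allowed, because an empty `Disallow:` blocks nothing.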

Now, let's upgrade our Robots.txt file. This version lets Google's AdSense crawler (Mediapartners-Google) crawl everything, while keeping all other crawlers out of certain non-required pages on our blog.

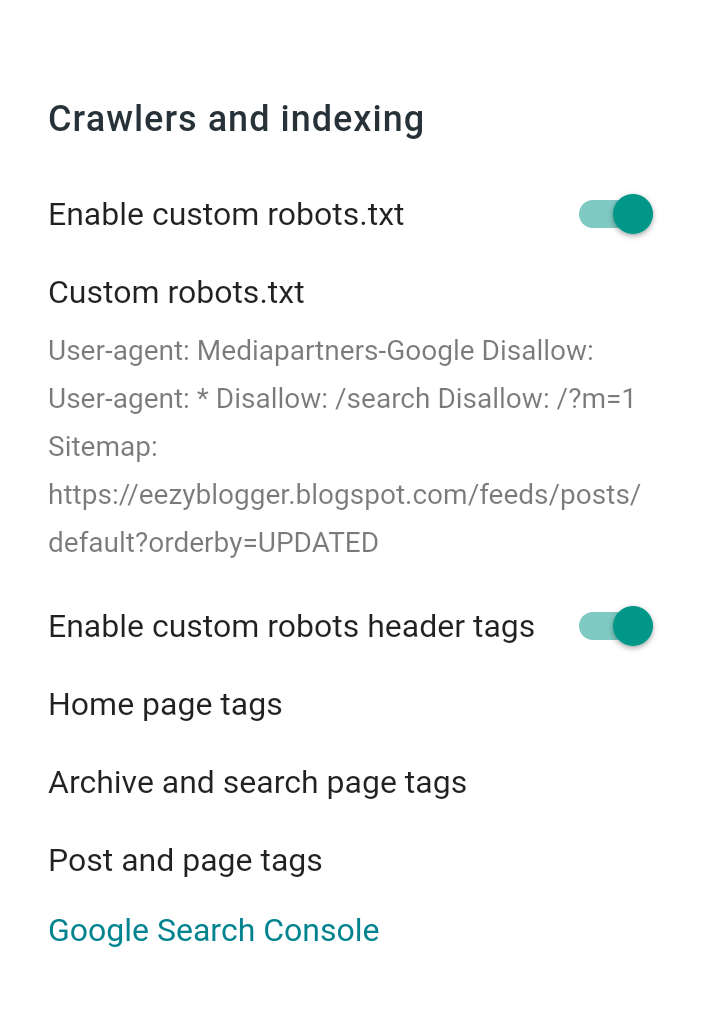

    User-agent: Mediapartners-Google
    Disallow:

    User-agent: *
    Disallow: /search/
    Disallow: /?m=1
    Allow: /

    Sitemap: https://yourblogname.blogspot.com/feeds/posts/default?orderby=UPDATED

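This Robots.txt file is a good starting point for most Blogspot blogs. The "Disallow: /search/" rule keeps crawlers out of label pages (URLs under /search/label/), and "Disallow: /?m=1" blocks the mobile '?m=1' version of the homepage. Because rules are matched from the start of the URL path, blocking '?m=1' links on individual posts would need a wildcard pattern such as "/*?m=1", which Googlebot and other major crawlers support. Remember to replace 'yourblogname.blogspot.com' with your own domain.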
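Here is a rough way to see how the two groups in this file behave, again using Python's standard `urllib.robotparser`. The paths in `checks` are hypothetical, and Python's parser applies the first matching rule in order, so treat this as a sanity check rather than an exact copy of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# The second robots.txt from this article, one directive per line.
BLOGGER_ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search/
Disallow: /?m=1
Allow: /

Sitemap: https://yourblogname.blogspot.com/feeds/posts/default?orderby=UPDATED
"""

blogger_parser = RobotFileParser()
blogger_parser.parse(BLOGGER_ROBOTS_TXT.splitlines())

BASE = "https://yourblogname.blogspot.com"
# (crawler name, path) pairs to check; the paths are made-up examples.
checks = [
    ("Googlebot", "/2024/01/my-post.html"),
    ("Googlebot", "/search/label/SEO"),
    ("Mediapartners-Google", "/search/label/SEO"),
]
results = {
    (agent, path): blogger_parser.can_fetch(agent, BASE + path)
    for agent, path in checks
}

for (agent, path), allowed in results.items():
    print(f"{agent:22} {path:25} {'allowed' if allowed else 'blocked'}")
```

The post should be allowed for Googlebot, the label page should be blocked for Googlebot, and the same label page should stay open to Mediapartners-Google because its own group has an empty `Disallow:`.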

How To Add Custom Robots.txt On Blogger

Adding the above Custom Robots.txt file to your Blogspot blog is quite simple. Just follow these steps:

- Access the Blogger Dashboard and navigate to the Settings menu.

- Click on the "Search Preferences" tab within the Settings menu.

- Scroll down to the "Crawlers and Indexing" section.

- Click on the "Edit" link next to the "Custom robots.txt" option.

- Enable the custom robots.txt file by checking the provided checkbox.

- Save your changes to enable the robots.txt option for your blog.

- Click on the "Edit robots.txt" link to create and customize your Robots.txt file.

- Now, simply copy and paste the Robots.txt file from the above code boxes of your choice. Afterward, click "Save" to apply the changes successfully.

That's it! By following these steps, you'll successfully add the Custom Robots.txt file to your Blogspot blog and have control over how search engines crawl and index your content.
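If you manage more than one blog, swapping the domain by hand gets tedious. The sketch below fills your own domain into the second Robots.txt file from this article so you can paste the result into Blogger. The names `ROBOTS_TEMPLATE` and `build_robots_txt` are made up for this example, and `www.example.com` is a placeholder.

```python
# Template based on the second robots.txt in this article; {host} is filled in below.
ROBOTS_TEMPLATE = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search/
Disallow: /?m=1
Allow: /

Sitemap: https://{host}/feeds/posts/default?orderby=UPDATED
"""


def build_robots_txt(blog_host: str) -> str:
    """Return the robots.txt text with blog_host substituted for the placeholder."""
    host = blog_host.strip().rstrip("/")
    # Accept either "www.example.com" or "https://www.example.com/".
    if "://" in host:
        host = host.split("://", 1)[1]
    return ROBOTS_TEMPLATE.format(host=host)


print(build_robots_txt("https://www.example.com/"))
```

Copy the printed text into the "Custom robots.txt" box described in the steps above.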

Understanding the Robots.txt File Syntax

I have provided you with the best Robots.txt file for your Blogger platform. However, I understand that some users may not be familiar with the terms used in the file. Let's take a closer look at what these terms mean within the Robots.txt file.

- User-agent: This directive specifies the search engine crawler or user agent to which the following directives apply. For example, "User-agent: Googlebot" refers to Google's crawler.

- Disallow: This directive tells search engine crawlers which pages or directories should not be crawled. For instance, "Disallow: /private/" instructs crawlers to skip the "/private/" directory. An empty "Disallow:" blocks nothing.

- Allow: This directive permits access to specific pages or directories, and it overrides a Disallow directive when it is the more specific (longer) match. For example, "Allow: /blog/" allows crawlers to access the "/blog/" directory even if other directories are disallowed.

- Sitemap: This directive specifies the location of your website's XML sitemap, which helps search engines understand the structure and hierarchy of your site.
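To show how Disallow and Allow interact, here is a simplified Python sketch of the matching approach described in RFC 9309 and in Google's documentation: the longest matching pattern wins, and Allow wins a tie. It is an illustration only, not the code any search engine actually runs, and the function names and sample paths are invented.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern: '*' is a wildcard, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """rules is a list of ("allow" | "disallow", pattern) pairs for one crawler group."""
    best_len, allowed = -1, True  # with no matching rule, crawling is allowed
    for kind, pattern in rules:
        if not pattern:  # an empty "Disallow:" matches nothing
            continue
        if pattern_to_regex(pattern).match(path):  # match() anchors at the path start
            is_allow = kind == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


# Allow overriding a broader Disallow, as in the "Allow: /blog/" example above.
override_rules = [("disallow", "/"), ("allow", "/blog/")]
print(is_allowed(override_rules, "/blog/first-post"))
print(is_allowed(override_rules, "/about"))

# Prefix matching versus a wildcard for the '?m=1' parameter.
prefix_rules = [("disallow", "/?m=1"), ("allow", "/")]
wildcard_rules = [("disallow", "/*?m=1"), ("allow", "/")]
print(is_allowed(prefix_rules, "/2024/01/my-post.html?m=1"))
print(is_allowed(wildcard_rules, "/2024/01/my-post.html?m=1"))
```

The last two lines show the difference that matters for Blogger: a plain "/?m=1" rule only matches the homepage, while the wildcard version also matches post URLs that end in '?m=1'.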

That's it! Now it's time to check if your Robots.txt file is working correctly on your blog. Let's proceed ahead and ensure everything is in order.

Testing your Robots.txt File

This step is crucial, as it confirms whether your Robots.txt file is working correctly on your blog.

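To test your robots.txt, first open https://yourblogname.blogspot.com/robots.txt in a browser (using your own domain) and confirm that it shows the rules you saved. Then go to Google Search Console and open its robots.txt tool. In older versions of Search Console this is the "robots.txt Tester" under the "Crawl" section; newer versions provide a robots.txt report under "Settings" instead. Here, you can validate your Robots.txt file and ask Google to fetch the updated version.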
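You can also run a quick check of your own alongside Search Console. The sketch below compares what a Robots.txt file actually allows against what you expect, using Python's standard library. The helper names `fetch_robots_txt` and `find_mismatches` are made up, the paths are examples, and Python's parser only approximates real crawler behavior.

```python
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser


def fetch_robots_txt(site: str) -> str:
    """Download robots.txt from the site root (needs network access)."""
    with urlopen(site.rstrip("/") + "/robots.txt", timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def find_mismatches(robots_txt, site, expectations, agent="Googlebot"):
    """Return the paths whose crawl permission differs from the expected value."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        path
        for path, expected in expectations.items()
        if parser.can_fetch(agent, site.rstrip("/") + path) != expected
    ]


# Offline example with a small inline file; True means "should be crawlable".
sample_txt = "User-agent: *\nDisallow: /search/\nAllow: /\n"
expected = {"/2024/01/my-post.html": True, "/search/label/SEO": False}
print(find_mismatches(sample_txt, "https://yourblogname.blogspot.com", expected))
```

An empty list means every path behaved as expected. To check your live blog, pass `fetch_robots_txt("https://yourblogname.blogspot.com")` as the first argument instead of `sample_txt`.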

That's it! You're all done. Now you can sit back, relax, and let search engines crawl and index your articles properly.

FAQs

Why is the Robots.txt file important for Blogger SEO?

The Robots.txt file guides search engine crawlers on which pages to access and which to exclude, optimizing your website's visibility and search engine rankings.

How can I create a Robots.txt file for my Blogger platform?

You can create a Robots.txt file by following the syntax guidelines above and pasting it into the "Custom robots.txt" option in your Blogger settings; Blogger then serves it from the root of your blog. Tools like Google Search Console can help verify its effectiveness.

What should I include in my Robots.txt file to boost SEO?

Ensure important pages are accessible to search engines while blocking duplicate content, sensitive information, or irrelevant directories. Utilizing wildcards and disallowing unnecessary crawl areas can further enhance SEO.

Are there any advanced techniques to maximize SEO benefits?

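Yes, optimizing crawling frequency, leveraging Robots.txt for mobile SEO, and using the "Crawl-delay" directive can provide advanced SEO benefits for your Blogger platform. Note that Googlebot ignores "Crawl-delay", so that directive only affects other crawlers that support it, such as Bingbot.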

How can I monitor the effectiveness of my Robots.txt file?

Regularly monitor crawl errors, use testing tools to verify proper functioning, and stay updated with search engine guidelines to ensure your Robots.txt file continues to boost SEO effectively.

Conclusion

By now, you have gained a comprehensive understanding of the importance of the Robots.txt file in optimizing your Blogger platform for SEO. Armed with the knowledge and guidelines provided in this article, you can create a perfect Robots.txt file that will propel your website's visibility, attract more organic traffic, and boost your search engine rankings by an astounding 96%.

Remember to periodically review and update your Robots.txt file to ensure its effectiveness in adapting to evolving search engine algorithms. Embrace this powerful SEO tool and unlock the full potential of your Blogger platform. Start creating your perfect Robots.txt file today and watch your blog thrive in the digital landscape.

If you need any assistance, please feel free to contact us or leave a comment, and we will get back to you as soon as possible. As Eezy Blogger, we strive to make it easy for people to find us online, so please share our website with your fellow bloggers to help us reach more people.

Wishing you a pleasant day! 😊 We look forward to your next visit.